Testing Quantization of Image Classifiers

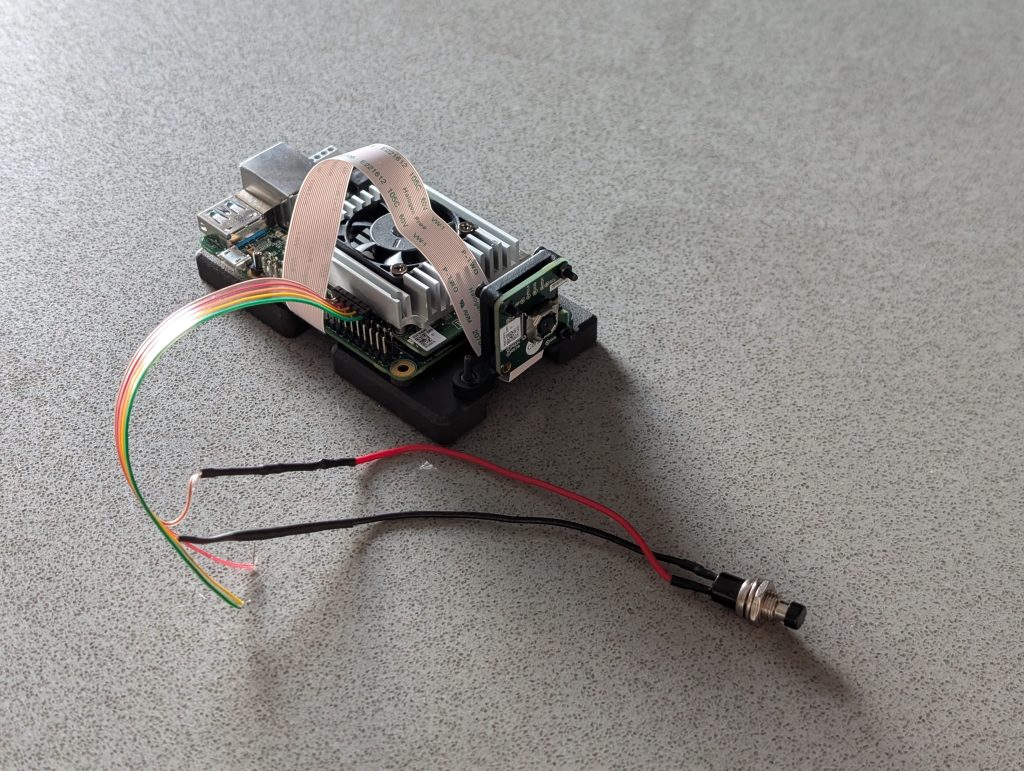

“AI” models tend to be slow. To a large degree this is unavoidable: more calculations to do means more time to run. Simply throwing more compute at the problem is one solution, but it raises the cost and limits the ability to run models “at the edge” (for example, on a phone). Another solution is […]
