For a model, metrics such as MAE and RMSE quantify how accurate a prediction is. However, the vast majority of metrics are symmetric: they penalize overestimates and underestimates equally.

So far, I have found only one relatively common way to account for direction: Mean Logarithmic Error (MLE). I also created its logical opposite, inverse MLE (iMLE). Straight from my AutoTS documentation: MLE penalizes an under-predicted forecast more heavily than an over-predicted forecast. iMLE is the inverse and penalizes an over-prediction more.

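```python
import numpy as np

full_errors = forecast - actual  # positive error = over-prediction
full_mae_errors = np.abs(full_errors)
le = np.log1p(full_mae_errors)  # softened (logarithmic) penalty

# MLE: log penalty for over-predictions, full absolute error for under-predictions
MLE = np.nanmean(np.where(full_errors > 0, le, full_mae_errors), axis=0)
# iMLE: log penalty for under-predictions, full absolute error for over-predictions
iMLE = np.nanmean(np.where(-full_errors > 0, le, full_mae_errors), axis=0)
```

The basic purpose of these metrics is to give a big penalty for crossing the boundary and only a small penalty for errors in the desired direction. Every approach comes down to a where statement (`np.where` in NumPy or `tf.where` in TensorFlow).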
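To see the asymmetry concretely, the snippet below wraps the same expressions in a small helper (the name `mle_imle` and the toy data are illustrative, not part of AutoTS) and compares a forecast that is 10 units too high with one that is 10 units too low. A sketch, assuming wide-style NumPy arrays with dates as rows:

```python
import numpy as np


def mle_imle(actual, forecast):
    """Return (MLE, iMLE) per column, using the expressions above."""
    full_errors = forecast - actual  # positive = over-prediction
    full_mae_errors = np.abs(full_errors)
    le = np.log1p(full_mae_errors)  # softened penalty
    # MLE: over-predictions get the log penalty, under-predictions the full error
    mle = np.nanmean(np.where(full_errors > 0, le, full_mae_errors), axis=0)
    # iMLE: under-predictions get the log penalty, over-predictions the full error
    imle = np.nanmean(np.where(-full_errors > 0, le, full_mae_errors), axis=0)
    return mle, imle


actual = np.array([[100.0], [100.0]])
over = actual + 10   # forecast 10 units too high
under = actual - 10  # forecast 10 units too low

print(mle_imle(actual, over))   # MLE = log1p(10) (about 2.40), iMLE = 10
print(mle_imle(actual, under))  # MLE = 10, iMLE = log1p(10) (about 2.40)
```

The same absolute miss costs roughly four times as much on the penalized side, which is the whole point of these metrics.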

But a point came up today: sometimes you want a threshold first. For example, you may not mind an overestimate of less than 10% very much, but anything greater than that you really want to avoid.

One way to add a threshold would be to replace the `> 0` comparison in the code above with an array of threshold values.

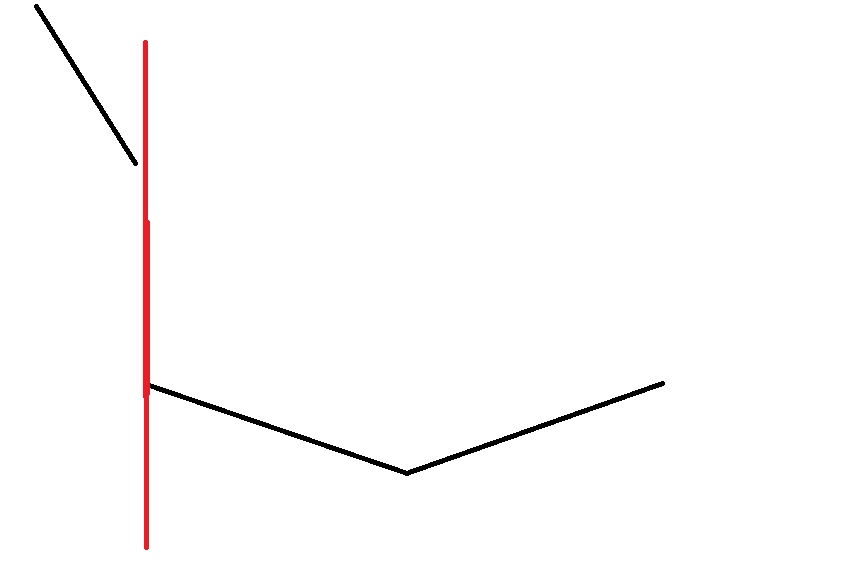
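A minimal sketch of that array-threshold idea, assuming a relative tolerance of 10% of the actual values. The function name `thresholded_imle` and the `tolerance` parameter are hypothetical, not part of AutoTS. It starts from iMLE, where over-predictions normally receive the full penalty, and shifts the cutoff so overestimates smaller than the tolerance still receive only the softened log penalty:

```python
import numpy as np


def thresholded_imle(actual, forecast, tolerance):
    """Hypothetical iMLE variant: overestimates smaller than `tolerance`
    receive the softer log penalty instead of the full absolute error.

    `tolerance` must broadcast against `actual` (e.g. 10% of the actuals).
    """
    full_errors = forecast - actual  # positive = over-prediction
    full_mae_errors = np.abs(full_errors)
    le = np.log1p(full_mae_errors)
    # iMLE uses `-full_errors > 0`; comparing against `-tolerance` instead of 0
    # extends the soft (log) penalty to overestimates below the tolerance
    soft = -full_errors > -tolerance
    return np.nanmean(np.where(soft, le, full_mae_errors), axis=0)


actual = np.array([[100.0], [100.0]])
forecast = np.array([[105.0], [120.0]])  # 5% and 20% overestimates
tolerance = 0.1 * np.abs(actual)         # tolerate overestimates under 10%
print(thresholded_imle(actual, forecast, tolerance))
# first row gets log1p(5), second row the full error of 20, mean about 10.9
```

With a tolerance of zero everywhere, this reduces to the plain iMLE expression.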

However, inspired by Pinball/Quantile loss, I wanted a function that could handle either direction, adjusted solely by the input threshold. Thus, we have the threshold_loss function.

```python
import numpy as np


def threshold_loss(actual, forecast, threshold, penalty_threshold=None):
    """Run once for overestimate, then again for underestimate. Add both for a combined view.

    From Colin Catlin at syllepsis.live

    Args:
        actual/forecast: 2D wide-style data, DataFrame or np.array
        threshold: in (0, 2); e.g. 0.9 penalizes underestimates of 10% and greater,
            1.1 penalizes overestimates of more than 10%
        penalty_threshold: defaults to the same value as threshold; adjusts the strength of the penalty
    """
    if penalty_threshold is None:
        penalty_threshold = threshold
    actual_threshold = actual * threshold
    abs_err = abs(actual - forecast)
    # forecasts at or below actual * threshold are scaled by 1 / penalty_threshold,
    # forecasts above it by penalty_threshold; with threshold > 1 this up-weights
    # large overestimates, with threshold < 1 it up-weights large underestimates
    ls = np.where(
        actual_threshold >= forecast,
        (1 / penalty_threshold) * abs_err,
        penalty_threshold * abs_err,
    )
    return np.nanmean(ls, axis=0)
```
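Written out, for series (column) $j$ with actual values $y_{ij}$, forecasts $\hat{y}_{ij}$, threshold $\tau$ and penalty $p$ (`penalty_threshold`), the function computes a weighted mean absolute error, where $n_j$ counts the non-NaN terms, just as `np.nanmean` skips NaN entries:

$$
L_j = \frac{1}{n_j}\sum_{i} w_{ij}\,\lvert y_{ij} - \hat{y}_{ij}\rvert,
\qquad
w_{ij} =
\begin{cases}
1/p, & \tau\, y_{ij} \ge \hat{y}_{ij},\\
p, & \text{otherwise.}
\end{cases}
$$

For example, with $\tau = p = 1.1$ and an actual of 100, a forecast of 105 stays under the threshold of 110 and contributes $5/1.1 \approx 4.55$, while a forecast of 115 crosses it and contributes $15 \times 1.1 = 16.5$.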

As written, this is essentially a weighted MAE. However, it would be easy enough to replace abs_err with a squared error (sqr_err) or something similar.
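A sketch of the squared-error version, assuming the same weighting is simply applied to squared errors. The name `threshold_loss_squared` is hypothetical. Because the weights multiply squared errors, the result behaves like a weighted MSE, so a single large miss past the threshold dominates the score even more:

```python
import numpy as np


def threshold_loss_squared(actual, forecast, threshold, penalty_threshold=None):
    """Hypothetical variant: threshold_loss weighting applied to squared errors."""
    if penalty_threshold is None:
        penalty_threshold = threshold
    actual = np.asarray(actual, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    sqr_err = (actual - forecast) ** 2
    # at or below actual * threshold: scale by 1 / penalty_threshold;
    # above it: scale by penalty_threshold (same rule as threshold_loss)
    ls = np.where(
        actual * threshold >= forecast,
        sqr_err / penalty_threshold,
        sqr_err * penalty_threshold,
    )
    return np.nanmean(ls, axis=0)


actual = np.array([[100.0], [100.0]])
forecast = np.array([[105.0], [115.0]])
print(threshold_loss_squared(actual, forecast, 1.1))
```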

Accordingly, when threshold is < 1, a larger value (worse) indicates more underestimates crossing the minimum tolerance threshold. When threshold is > 1, a larger value (worse) indicates more overestimates above the threshold. In both cases, lower scores represent a better forecast (lower MAE) and/or better conformance to the threshold.
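A small usage example, following the docstring's advice to run the function once per direction and add the results. The data is made up purely for illustration: series 0 tends to overestimate and series 1 tends to underestimate. Because the threshold is multiplicative (`actual * threshold`), this reading assumes positive actual values; with negative actuals the comparison flips direction.

```python
import numpy as np


def threshold_loss(actual, forecast, threshold, penalty_threshold=None):
    # same logic as the function above, repeated so this example runs on its own
    if penalty_threshold is None:
        penalty_threshold = threshold
    actual_threshold = actual * threshold
    abs_err = abs(actual - forecast)
    ls = np.where(
        actual_threshold >= forecast,
        (1 / penalty_threshold) * abs_err,
        penalty_threshold * abs_err,
    )
    return np.nanmean(ls, axis=0)


# wide-style data: 3 dates (rows) x 2 series (columns), all actuals = 100
actual = np.full((3, 2), 100.0)
forecast = np.array([
    [105.0, 100.0],
    [120.0, 80.0],  # series 0 overshoots by 20%, series 1 undershoots by 20%
    [100.0, 95.0],
])

over = threshold_loss(actual, forecast, 1.1)   # about [8.85, 7.58]
under = threshold_loss(actual, forecast, 0.9)  # about [7.50, 8.91]
combined = over + under                        # about [16.35, 16.48]
```

Series 0 scores worse at `threshold=1.1` and series 1 scores worse at `threshold=0.9`, matching the direction in which each one errs.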

If you only care about threshold crossing and not forecast accuracy inside the bounds, then none of these metrics is needed; a simple classification-type metric (1 for inside the bounds, 0 for outside), averaged over the records, works quite well.

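```python
import numpy as np

# returns the fraction (0 to 1) of records inside the bounds for each column of
# wide-style data (rows: records/dates, columns: features/time series);
# upper_bound and lower_bound are arrays with the same shape as forecast
np.count_nonzero(
    (upper_bound >= forecast) & (lower_bound <= forecast), axis=0
) / forecast.shape[0]
```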
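To tie this back to the thresholds above, the bounds can be derived from the actuals, for example 0.9 and 1.1 times the actual value. This is a sketch; the function name `pct_inside_bounds` and the choice of multiplicative bounds are assumptions for illustration.

```python
import numpy as np


def pct_inside_bounds(actual, forecast, lower=0.9, upper=1.1):
    """Fraction of rows per column where forecast lies within
    [lower * actual, upper * actual] (hypothetical helper)."""
    actual = np.asarray(actual, dtype=float)
    forecast = np.asarray(forecast, dtype=float)
    lower_bound = actual * lower
    upper_bound = actual * upper
    inside = (upper_bound >= forecast) & (lower_bound <= forecast)
    return np.count_nonzero(inside, axis=0) / forecast.shape[0]


actual = np.full((3, 2), 100.0)
forecast = np.array([[105.0, 100.0], [120.0, 80.0], [100.0, 95.0]])
print(pct_inside_bounds(actual, forecast))  # 2 of 3 rows inside for both series
```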