As part of my time series forecasting package, I have invented my own algorithm. That sounds really cool, but in reality it isn’t that special: it is just a combination of existing ideas applied together to this specific domain. On the positive side, the method isn’t difficult to explain, so let me walk you through it.

Time series forecasting is all about predicting the future. The future is inherently unknowable. There will always be uncertainty and error – there is never a ‘right’ answer. This makes the domain rather uncomfortable, and avoided by many. Yet here we start with the same thing we always do, data.

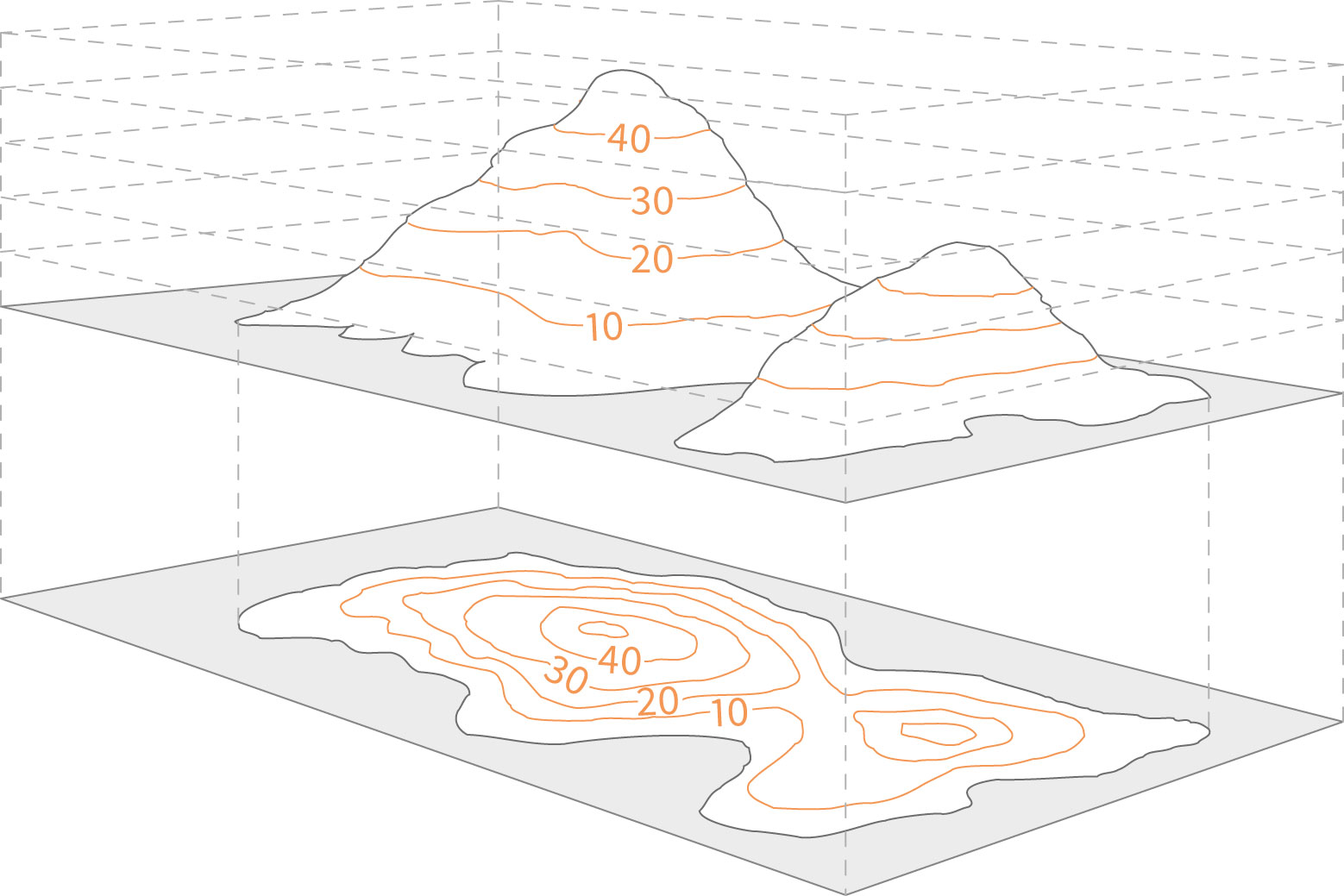

The method starts by selecting the most recent section of the available historic data. This is a motif, or phrase, of fixed length, which I call the last_motif. The goal then is simple: find past motifs in the historic data and compare them to the last_motif. The hypothesis is that the periods most similar to the last_motif will be followed by similar behavior, which can then be used to forecast. This is essentially a specialized version of clustering.

Take an example. Suppose the last_motif is the stock market’s most recent five days, and all five days closed lower than the previous day. We look back through all the historic data for other times the market fell five days in a row. Since those are pretty rare, we also consider times when four of the five days went down, and other similar periods, although we give these slightly lower importance because they aren’t as close a match. The similarity itself is computed with a pairwise metric such as cosine similarity. We then take, say, the 20 most closely related motifs and the data that follows each of them. In this case, we apply the day-by-day percent changes that followed each match to the end of our most recent data, simulating 20 possibilities of where the series is most likely to go based on the most closely related history. The average of these 20 possibilities becomes our forecast, and the upper and lower bounds are constructed from the distribution of the simulated paths.
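The steps in this example can be sketched in a few lines of NumPy. This is a minimal illustration and not the AutoTS implementation: the function name `motif_forecast`, its parameters, and the 5%/95% quantile bounds are assumptions made for the sketch, and it uses a plain unweighted average of the matched paths rather than weighting closer matches more heavily.

```python
import numpy as np

def motif_forecast(series, motif_len=5, horizon=3, k=20):
    """Hypothetical sketch: forecast from the k past motifs most similar to the latest one."""
    series = np.asarray(series, dtype=float)
    pct = series[1:] / series[:-1] - 1.0       # day-by-day percent change
    last_motif = pct[-motif_len:]              # most recent motif

    # Each candidate window needs `horizon` steps of data following it.
    n_candidates = len(pct) - motif_len - horizon + 1
    if n_candidates < 1:
        raise ValueError("not enough history for this motif_len and horizon")

    windows = np.array([pct[i:i + motif_len] for i in range(n_candidates)])
    futures = np.array([pct[i + motif_len:i + motif_len + horizon]
                        for i in range(n_candidates)])

    # Cosine similarity between every past window and the last motif
    # (windows with zero norm get a similarity of 0).
    norms = np.linalg.norm(windows, axis=1) * np.linalg.norm(last_motif)
    sims = np.divide(windows @ last_motif, norms,
                     out=np.zeros(n_candidates), where=norms > 0)

    # Keep the k best matches (fewer if the history is short).
    top = np.argsort(sims)[::-1][:k]

    # Apply what followed each match to the end of the recent data.
    paths = series[-1] * np.cumprod(1.0 + futures[top], axis=1)

    forecast = paths.mean(axis=0)
    lower = np.quantile(paths, 0.05, axis=0)
    upper = np.quantile(paths, 0.95, axis=0)
    return forecast, lower, upper

# Usage on a synthetic random walk (made-up data, for illustration only).
rng = np.random.default_rng(0)
prices = 100 * np.cumprod(1 + rng.normal(0, 0.01, size=500))
forecast, lower, upper = motif_forecast(prices, motif_len=5, horizon=3, k=20)
```

Note that the sketch always returns a forecast as long as at least one candidate window exists, because it simply ranks whatever history is available and keeps the best `k`.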

Behind the scenes there are nine parameters that can greatly affect how well this forecast works. For example, we could compare 10-day motifs instead of 5-day motifs. Instead of comparing the percent change (I am a fan of these, which I call ‘contoured’ forecasts, rather than the raw magnitude most people use), we could compare the raw magnitude. Different distance metrics can be used. One interesting option is the ability to share or not share the data among multiple time series, either comparing a series only to itself or also to its many kin. The code currently lives at the end of the basics.py file in AutoTS.
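To see why the choice between percent change and raw magnitude matters, here is a small made-up illustration (the helper names `cosine` and `pct_change` are hypothetical, not AutoTS functions). With cosine similarity, two windows of raw prices look almost identical simply because both are large positive numbers, even when one is rising and the other falling. Comparing percent changes instead compares the shape of the movement.

```python
import numpy as np

def cosine(a, b):
    """Cosine similarity between two 1-D sequences."""
    a, b = np.asarray(a, dtype=float), np.asarray(b, dtype=float)
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def pct_change(x):
    """Step-to-step percent change of a 1-D sequence."""
    x = np.asarray(x, dtype=float)
    return x[1:] / x[:-1] - 1.0

rising = [100, 101, 102, 103]    # steadily going up
falling = [200, 198, 196, 194]   # steadily going down

# Raw magnitude: dominated by the level, so the two look alike (close to +1).
raw_similarity = cosine(rising, falling)

# 'Contoured' comparison: opposite directions, so they look opposite (close to -1).
contour_similarity = cosine(pct_change(rising), pct_change(falling))
```

Other distance metrics behave differently on raw values, so this is only one reason a contoured comparison can be attractive, not a general rule.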

Here is the cool thing: in my testing it has outperformed many existing models in some circumstances. It handily destroys ARIMA models. On weekly EIA (petroleum) data, one variation of my model outperformed a thousand other models on cross-validated accuracy over the last three years. In my testing so far, it has been among the top models for most of the different datasets. It isn’t usually the best, but no one model ever is. The satisfying thing is how well the model generalizes to novel types of data, whereas many existing models were designed with one kind of data (daily, monthly, not hourly or yearly) in mind. I made something up, cobbled from a collection of random ideas, and it works! That feels good.

The model does suffer a number of drawbacks. Firstly, it is rather slow. This is largely due to the model being thrown together by me and not being optimized as well as established models built in other packages. Secondly, quite a number of different model combinations need to be tried in order to reach the optimal one for a situation. This is largely because I have not yet become clear on what the best parameters are overall, and thus have not yet optimized the defaults.

Really interesting!

How do you deal with the case when the classification algorithm cannot find similar previous motifs?

I guess that may affect the confidence interval too.

Great idea that you can look for motifs in other related time series!

I’ll be looking into testing this, nice work!

Probably best asking questions on GitHub discussions for AutoTS if you want a speedy reply.

It always finds similar motifs if there is any history at all – it finds the K best of those available. Now, if the history isn’t very related, then those paths will be lower quality and it will be a low quality forecast.

Since this was written I’ve also added a SeasonalityMotif (built on Fourier terms of the records’ datetimes) and a MetricMotif (a faster version with integrated preprocessing).