In the world of forecasting there is a bit of a mystery. Many expert forecasters continue to use statistical models despite the strong push toward machine learning (ML) and deep learning neural network (NN) models. Those less familiar with forecasting often assume that, because ML/NN models dominate computer vision and language, they must dominate here too. These same people often run tests showing an ML/NN model beating some statistical model, such as an ARIMA(0,1,1), and publish them on Medium and, indeed, in plenty of scientific papers. Certainly ML and NN models can sometimes forecast well. But my testing with AutoTS, which runs deep searches across all model types with many parameter combinations, shows no clear victory for the ‘new’ ML/NN models, and often (though not always) statistical or even naive models are superior.

So what’s going on? Why do so many published results show neural networks and machine learning in the lead, and yet those results are so often met with doubt?

I have an explanation: data preprocessing and transformation.

Something I am seeing quite clearly is that statistical models tend to depend heavily on external data processing through transformations, whereas ML/NN models can often perform quite well with little or no pre- or postprocessing, doing well on raw, messy data.

Transformers (no relation to the transformer neural network architecture, nor to the robot car movies) is the name AutoTS gives to its data processing classes, which follow the scikit-learn style. Transformers are a huge bucket of things in AutoTS, comprising some 50 different methods, some with quite a lot of parameter options (and some overlap). They include filtering (removing anomalies or high-frequency components), decomposition (separating seasonality from trend), postprocessing (rounding, aligning values), data scaling, and more.
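To make the scikit-learn style concrete, here is a minimal sketch of the fit / transform / inverse_transform pattern applied to a simple decomposition step (removing a linear trend). The class `LinearDetrend` and the toy `mean_forecast` model are hypothetical illustrations written for this example, not AutoTS’s own classes or signatures. The point is only the shape of the workflow: transform the history, let a simple statistical model forecast in the transformed space, then invert the transformation to get back to the original scale.

```python
import numpy as np


class LinearDetrend:
    """Hypothetical scikit-learn-style transformer (not an AutoTS class).

    fit() learns a straight-line trend, transform() removes it, and
    inverse_transform() adds it back onto forecasts of future steps.
    """

    def fit(self, y):
        y = np.asarray(y, dtype=float)
        t = np.arange(len(y))
        # np.polyfit with deg=1 returns [slope, intercept]
        self.slope_, self.intercept_ = np.polyfit(t, y, deg=1)
        self.n_ = len(y)
        return self

    def _trend(self, start, length):
        t = np.arange(start, start + length)
        return self.slope_ * t + self.intercept_

    def transform(self, y):
        # Assumes y is the training series, starting at time index 0.
        y = np.asarray(y, dtype=float)
        return y - self._trend(0, len(y))

    def fit_transform(self, y):
        return self.fit(y).transform(y)

    def inverse_transform(self, residual_forecast):
        # Forecast steps continue the time index after the training data.
        r = np.asarray(residual_forecast, dtype=float)
        return r + self._trend(self.n_, len(r))


def mean_forecast(y, horizon):
    """A very simple 'statistical' model: forecast the historical mean."""
    return np.full(horizon, np.mean(y))


# Toy series: upward trend plus a small alternating wiggle.
history = 10 + 0.5 * np.arange(24) + np.tile([1.0, -1.0], 12)
horizon = 4

raw = mean_forecast(history, horizon)  # ignores the trend entirely
detrender = LinearDetrend()
residuals = detrender.fit_transform(history)
with_transform = detrender.inverse_transform(mean_forecast(residuals, horizon))
```

On this toy series the raw mean forecast stays flat in the middle of the history, while the same mean model applied after detrending continues the upward trend. The model did not change; only the preprocessing did.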

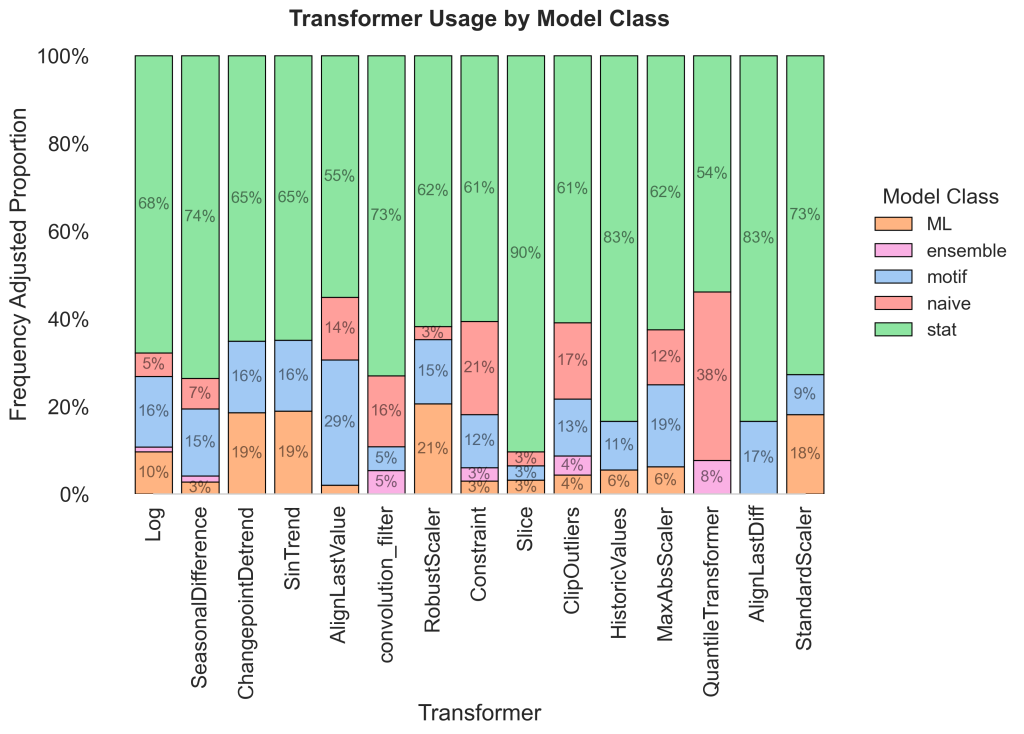

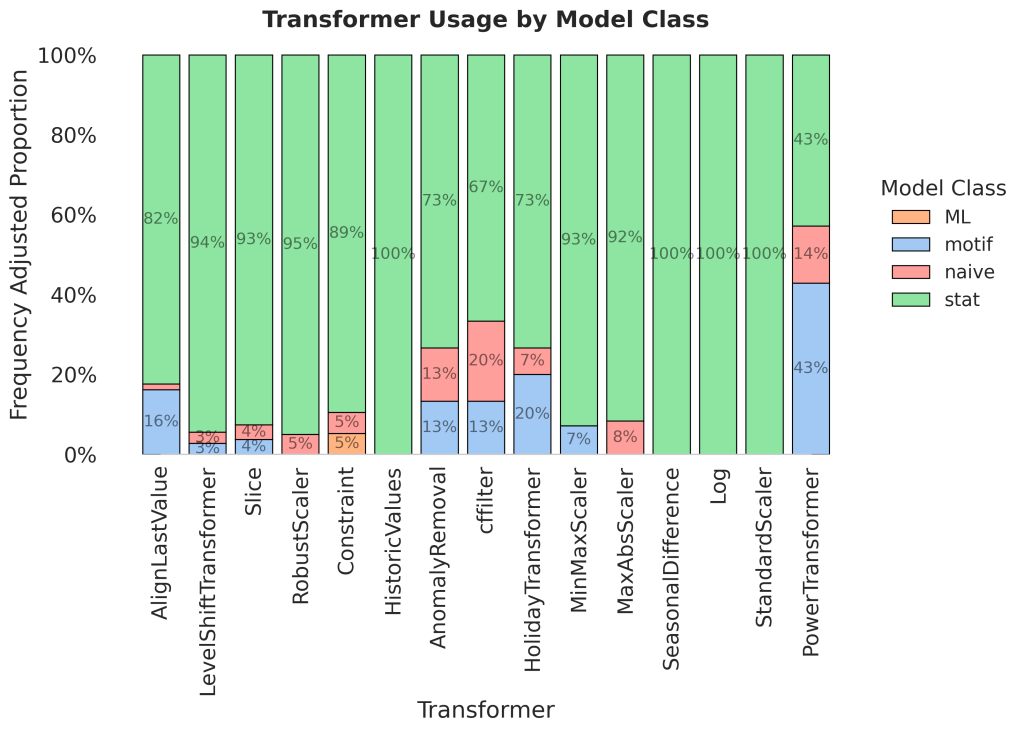

These graphs take the best-performing models from extensive model searches and count which transformers those top models used most, broken down by model type and normalized to account for how often each model type was selected. They paint a pretty clear picture: statistical models dominate the use of these transformers. Other model types do improve with these data transformations, but with nowhere near the dependency that statistical models show.
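One way to read “normalized to account for frequency of the selection of that model type” is as a per-type share: of all the top models of a given type, what fraction used a given transformer. The sketch below computes that share. The exact normalization behind the graphs is not spelled out here, so treat this as an assumed interpretation; the function name and the input records (model type labels and transformer names) are hypothetical placeholders, not real search results.

```python
from collections import Counter, defaultdict


def transformer_usage_by_model_type(top_models):
    """Share of top models of each type that used each transformer.

    top_models: list of (model_type, list_of_transformer_names) pairs.
    Dividing by the number of selected models of that type removes the
    effect of some model types simply being chosen more often.
    """
    models_per_type = Counter(model_type for model_type, _ in top_models)
    uses = defaultdict(Counter)
    for model_type, transformers in top_models:
        # set() so a transformer applied twice in one chain counts once
        for name in set(transformers):
            uses[model_type][name] += 1
    return {
        model_type: {
            name: count / models_per_type[model_type]
            for name, count in counts.items()
        }
        for model_type, counts in uses.items()
    }


# Illustrative placeholder input only (not real search results).
top_models = [
    ("Statistical", ["Detrend", "Scaler"]),
    ("Statistical", ["Detrend"]),
    ("ML", ["Scaler"]),
    ("ML", []),
    ("ML", []),
    ("ML", []),
]
usage = transformer_usage_by_model_type(top_models)
```

In this made-up input, "Scaler" appears once under each model type, so raw counts would call it a tie. Normalized, it shows up in half of the statistical models but only a quarter of the ML models, which is the kind of distinction the normalization is there to surface.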

These results are pretty clear, if not detailed: statistical models thrive with data preprocessing, while other models rely on it much less.

And this explains many of the results. Most beginners don’t know to use these data tools, or how to use them, and so tend to see better results with ML. Expert researchers running giant benchmarks simply don’t have time to tune their data preparation for every possible input series, and will often apply the same approach to every model they test, usually one fine-tuned for whatever they are selling in their paper.

The ability to search for data transformations and models at the same time in one space is one of the best reasons to use AutoTS and why it can deliver good performance across many datasets.
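As a toy illustration of searching transformations and models together, the sketch below scores every (transformer, model) pair on a holdout window and keeps the best. AutoTS’s own search is much larger and more sophisticated than this brute-force grid; the names `TRANSFORMERS`, `MODELS`, and `search` are hypothetical, and mean absolute error is an assumed choice of metric.

```python
import itertools

import numpy as np


def identity_forward(y):
    return y, None


def identity_inverse(forecast, state):
    return forecast


def diff_forward(y):
    # Keep the last observed level so differences can be summed back up.
    return np.diff(y), y[-1]


def diff_inverse(forecast, last_level):
    return last_level + np.cumsum(forecast)


# Each transformer is a (forward, inverse) pair.
TRANSFORMERS = {
    "none": (identity_forward, identity_inverse),
    "difference": (diff_forward, diff_inverse),
}

# Two naive models that forecast in whatever space they are given.
MODELS = {
    "last_value": lambda z, h: np.full(h, z[-1]),
    "mean": lambda z, h: np.full(h, z.mean()),
}


def search(y, horizon):
    """Score every transformer/model pair on the last `horizon` points."""
    train, test = y[:-horizon], y[-horizon:]
    scores = {}
    for (t_name, (fwd, inv)), (m_name, model) in itertools.product(
        TRANSFORMERS.items(), MODELS.items()
    ):
        z, state = fwd(train)
        forecast = inv(model(z, horizon), state)
        scores[(t_name, m_name)] = float(np.mean(np.abs(forecast - test)))
    best = min(scores, key=scores.get)
    return best, scores


# Toy series: linear trend plus an alternating +1/-1 wiggle.
t = np.arange(30)
y = 5 + 2 * t + np.where(t % 2 == 0, 1.0, -1.0)
best, scores = search(y, horizon=5)
```

Here the winning pair is differencing plus the mean model, and that combination is exactly the classic random-walk-with-drift forecast (last value plus the average step). Neither piece alone is a trend model; paired together they become one, which is why searching the two spaces jointly matters.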

My definitions of model types/classes:

- Statistical and Linear Models
  - Linear and statistical models are much more explainable, work well with short histories, and are the longest-established model class.

- Machine Learning (e.g., LightGBM) Models
  - These are the current winners in most demand forecasting. They are generally much more flexible and capable of capturing complexity than statistical models.

- Deep Learning Models
  - A type of ML model, but for our purposes here, the “maximal complexity” approach, requiring lots of data. They have been around for a while, but there is particular interest now in their (theoretical) ability to “learn all time series ever” in a single super model that can predict everything (as an LLM does with language).

These are, of course, broad generalizations, and there is a lot of overlap between the groups. I often group ML and deep learning together, as they are generally fairly similar in practice here (big, lots of parameters, fit with lots of complexity). Naive models are broken out separately; these very simple models, with lots of preprocessing, can become rather like statistical or linear models. Finally, motifs are a major grouping in AutoTS, hence called out separately, and are a more “directly data-based” methodology than the statistical or model-based approaches. Most motif models also call preprocessing internally, with parameters that are not shown, so the graphs above underestimate their “true” use of transformers; they thrive with transformers as well.

Colin Catlin, 2025