This book, Algorithms to Live By: What Computers Can Teach Us About Solving Human Problems, came to me via Amazon’s recommendation system and sold itself by being well-reviewed. The book’s premise is simple: take algorithms which are critical in various aspects of computing, explain them, and then connect them to human problems (such as parking, scheduling, marriage, and so on).

Coming into this book, I was a bit skeptical about how well it would actually be able to deliver on its promise. I assumed it might be either too dumbed-down or too lost in technical details without delivering the connection to the real world. Yet the book actually did quite a remarkable job of introducing technical concepts (with essentially no math) in a fun and interesting way. Many things could have been done better, but this book was still far better than anything else I have read before which had a similar goal of merging together ‘math’ and ‘how to live your life’ recommendations.

Of course, the general difficulty of connecting ‘math’ to practical advice has more to do with math not being nearly as perfect as mathematicians always assume. I hate to break it to you, but math is not the ultimate reasoning of the universe; it is simply one way that humans have come up with of trying to structure our knowledge of the universe’s patterns. I have always preferred evolutionary biology explanations and solutions to human problems because such explanations provide a complete story with a clear ‘why.’

Luckily, computer scientists, who have spent large amounts of time fitting mathematics into practical use, seem to have some useful knowledge to impart. Indeed, the authors show their divergence from pure math by valuing randomness, simple heuristics, and approximation far above more definitive solutions.

The topics in Algorithms to Live By were various, but there was a strong bias towards computer resource management. Indeed, one of my greater disappointments was that the chapter on ‘networks’ included absolutely nothing on network analysis, and only discussed network traffic management.

| Opinion | Chapters/Subjects |
| --- | --- |
| Liked and Saw Strong Connection | randomness, explore/exploit tradeoffs, optimal stopping, Bayes’s rule |
| Liked but Didn’t See Strong Connection | caching, sorting, and scheduling |
| Didn’t Like (usually because of weak connection) | relaxation, networks, game theory, overfitting |

Even those topics which I was already familiar with were interesting and useful to hear from another perspective – like the Bayes’s Rule chapter. I also particularly liked caching, sorting, and scheduling because those are topics I have some offhand knowledge of, and the additional knowledge will probably be of practical benefit to me.

Yet those topics where I said there was a ‘weak connection’ were those where the authors’ practical applications were more… philosophical. Game Theory was a great example of this weakness. The message was: many games/situations have equilibrium/dominant strategies that are not ideal for the participants. Solution: it’s possible to change the game/situation to one where the rules favor general benefit for the participants (i.e., add ‘The Godfather’ as an outside pressure to stay silent, to make the Prisoner’s Dilemma most beneficial for the prisoners). I found this a very interesting presentation, and food for thought. But in real life, what’s the difference? Be more flexible and thoughtful in how you run things, I guess.

One thing that can be said about this book is that it is optimistic about human nature. It generally argues that humans are fully capable of doing things quite well (we aren’t innately flawed, and in fact, apparent flaws like our poor memory are actually optimal for the world we live in). It also presents hope, with the constraint relaxation and game theory chapters showing how many situations can be improved by reframing them in strategic ways. Maybe it’s just me, but are computer scientists in general rather upbeat people?

Let me say two quick things about the book before I wander too much more:

- It has very good notes, bibliography, and index. Indeed, I wish most academic books were put together as well as this one was.

- The conclusion sucked. It reads exactly like the kind of conclusion I would write moments before an essay is due/exam time is up, and I just need to say all the expected things quickly. I wanted something more visionary, and I got a quick recap instead.

Which generally wraps up my opinions on the book itself: that it was very interesting and engaging, but not an absolute knock-out. It felt like a good philosophy treatise, of the early 21st century nerdom school of reasoning.

So then, what did I learn?

Leaving aside the interesting nerdy tidbits I picked up, I would say here’s what I learned.

Humans Don’t Explore Enough:

This closely parallels an article I saw this week in the journal Science titled “When Persistence Doesn’t Pay.” The general premise is that humans tend to overvalue the sunk cost of past operations, while failing to explore and try new options as often as they should. In the Science article, the example was that humans basically stick with binge-watching a mediocre show rather than trying a new one. In the Algorithms book, the examples were more about gamblers waiting too long to gather information and remaining too uncertain, or conversely, hiring managers choosing the secretary too soon in the secretary problem. In an evolutionary sense, this would seem to suggest humans evolved in a far more uncertain and unstable world – where clinging to any survivable option was generally a better strategy than trying elsewhere.

Which means I should take up my job opportunities to move away, rather than staying in the safe Minneapolis area? See, here the problem is more complicated, in that I know from various metrics that the Twin Cities are one of the best places for someone like me (fit, lots of parks, good economy, and so on), and I also have a huge depth of local knowledge (sunk cost) and the allure of being relatively close to the farm (which I love) and my parents (emergency aid source). I have no idea what I will do, yet.
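
For the curious, the secretary problem mentioned above is easy to simulate. The sketch below is my own illustration, not code from the book: it assumes the classic setup (candidates arrive in random order, you can only accept or reject on the spot, and only picking the single best counts as a win) and compares how often different "look, then leap" cutoffs succeed. The function names, trial count, and seed are all arbitrary choices of mine.

```python
import random


def secretary_trial(n, look_fraction, rng):
    """Return True if the look-then-leap rule picks the single best candidate."""
    ranks = list(range(n))  # higher number = better candidate
    rng.shuffle(ranks)      # candidates arrive in random order
    cutoff = int(n * look_fraction)
    # Look phase: reject everyone, but remember the best one seen so far.
    best_seen = max(ranks[:cutoff], default=-1)
    # Leap phase: take the first candidate who beats that benchmark.
    for score in ranks[cutoff:]:
        if score > best_seen:
            return score == n - 1
    # Nobody beat the benchmark, so the best candidate was already rejected.
    return False


def success_rate(n, look_fraction, trials=2000, seed=0):
    """Estimate how often a given look fraction lands the best candidate."""
    rng = random.Random(seed)  # fixed seed so the estimate is repeatable
    wins = sum(secretary_trial(n, look_fraction, rng) for _ in range(trials))
    return wins / trials


if __name__ == "__main__":
    for fraction in (0.05, 0.37, 0.90):
        print(fraction, success_rate(100, fraction))
```

Leaping too soon (a tiny look phase) and waiting too long (a huge one) should both do noticeably worse than looking at roughly the first 37% of candidates, which is the cutoff the optimal stopping chapter argues for.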

Randomness and Optimization

You know that I complained earlier about mathematicians as being too ordered? Well, randomness is far more important in our world than most people accept. It only takes a few coincidences in life to convince most people that a god is real, when the probability of such coincidences being random chance is high, very high. I mean, I love ancient Rome, but taking a compiled, edited, and translated two-thousand-year-old Judeo-Greco-Roman history text as the basic truth of reality… wow, that’s a bit much.

Instead, embracing randomness is the way to go. Indeed, the single fact driving all of evolution is the random mutation upon which selection can then occur. Instead of positing a ‘higher power,’ like a definitive algorithm, some of the most powerful machine learning methods run on random sampling (fancy name ‘Monte Carlo simulation’), and the good old guess-and-check is really how we actually fit these models to every problem (which goes under fancy names like ‘stochastic gradient descent’).

Human unwillingness to try and fail is one of the basic reasons we so blatantly refuse more ‘random’ approaches to thinking. An elaborate explanation of the world provides us with certainty, although, ironically, often an illusory one. This parallels our failure to explore other options as much as we should. That is… as much as we should to achieve an optimal account.

Indeed, one of the things I am forced to conclude is that humans do not seek optimal outcomes. Rather, they go only so far as to seek what they perceive as a reasonable outcome, and leave it at that. My suspicion is that the countervailing force against optimization is human collective society. If everyone in a society is seeking their own, different optima, one imagines that the society in question is much more likely to fall apart. Then again, the earlier mentioned article “When Persistence Doesn’t Pay” observed similar behaviors in foraging rats and mice, so perhaps my theory about society is wrong.

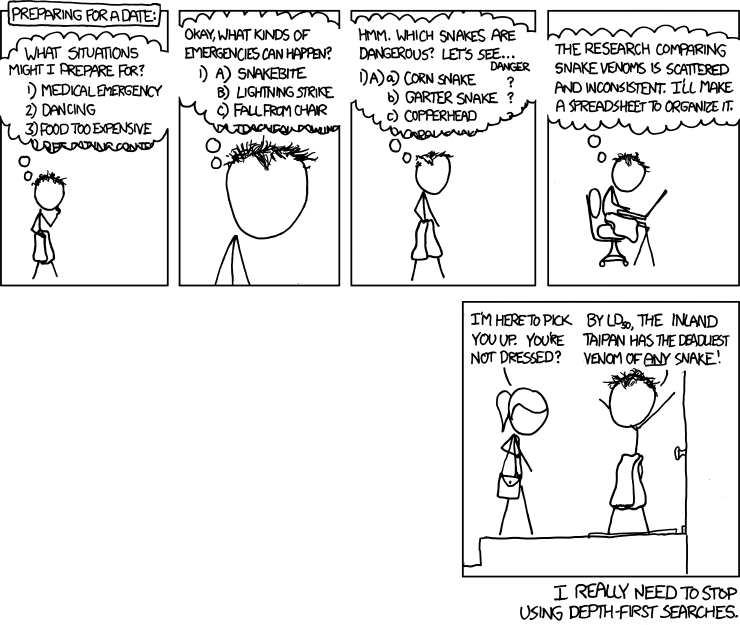

Such cognitive biases are then, by this article, suggested to be due to the difficulty of computing optima. The scene in The Princess Bride with the debate over which cup is poisoned is exactly the sort of problem humans have to solve (‘recursion’ as the Algorithms book calls this, so Vizzini really suffers from stack overflow), and it is basically impossible.

So what is to be done? Either change the game around (as the Man in Black does by poisoning both cups, and as suggested in the section on game theory) or take a random guess (because that’s really all that can be done) and save the extra energy for solving other problems. The random guess may seem like a poor option when you are challenging a Sicilian with death on the line, but most difficulties in our life allow us many attempts, so a random guess coupled with an algorithm to search for the optimal answer over time is actually quite effective.
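
To make "a random guess coupled with an algorithm to search over time" a bit more concrete, here is a minimal guess-and-check sketch. It is only my illustration under made-up assumptions: `happiness` is a hypothetical one-dimensional score with a single peak at 3.0, and the step size, attempt count, and seed are arbitrary. Real problems are rarely this tidy.

```python
import random


def guess_and_check(score, start, step=1.0, attempts=500, seed=0):
    """Start from a guess, try random nearby options, keep only improvements."""
    rng = random.Random(seed)
    best, best_score = start, score(start)
    for _ in range(attempts):
        # Take a random step away from the best option found so far.
        candidate = best + rng.uniform(-step, step)
        candidate_score = score(candidate)
        # Keep the new option only if it actually scores better.
        if candidate_score > best_score:
            best, best_score = candidate, candidate_score
    return best


def happiness(x):
    """Hypothetical score to maximize; its single peak sits at x = 3.0."""
    return -(x - 3.0) ** 2


if __name__ == "__main__":
    first_guess = random.Random(1).uniform(-10, 10)  # the initial random guess
    print(guess_and_check(happiness, first_guess))
```

No single attempt has to be clever here. The only requirements are that trying again is cheap and that you can tell whether the new option is better than the old one.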

I suppose I assume that ‘happiness’ is the metric being optimized with our lives (beyond the metric of simple ‘survival,’ which being a binary yes/no outcome can’t really be optimized on effectively). The authors here certainly have the viewpoint that humans seek to optimize happiness. And honestly, I think that is simply the fact of how humans work. We seek to be happy, although people’s ideas differ widely on this (some being happiest with ruthless ambition, others seeking happiness through selfless assistance of others). I am quite curious as to how the neuro-chemistry of self-optimization works. I believe there is also an element of optimizing on having the most brain activity as well as a strong constraint on having ‘well-ordered’ brain activity, whatever that means. I also think the brain being random is a very important part in making proper consciousness as well – something akin to second order criticality, in which our brain’s power to imagine is largely triggered by ripples caused by random inputs.

Indeed, I have now determined what I would have liked to see in this book’s conclusion: a discussion of how Bayesian inference, randomness, explore/exploit, optimization, and all the rest combine to form a plan. The pattern of behavior that emerges is something like this: start with what you know (your Bayesian prior) and then move in a random, unexplored direction. Analyze your movement and compute the Bayesian posterior, but don’t overfit by reading too much into any particular event. As you explore, continue to take risks and new opportunities, but generally move in the direction of what you find you like. If you are only ‘digging the hole deeper,’ then reframe the problem (try ‘relaxation,’ revising what you are looking for and crossing your boundaries a bit), or try radically new options and, by pseudo-game theory, remake the situation into one with a winnable equilibrium. Remember that our body’s ‘just exploring’ stage of life, childhood, is short because life expectancies used to be much shorter – we have much longer to spend exploring before our ‘optimal stopping’ point is reached. Managing all these options and the exploration they require is difficult, and that is where caching, scheduling, and sorting come in – the conclusion here, to me, seemed to be that forgetting and letting things slip is okay; just focus on prioritizing what you do let slip (generally, let big but unimportant things go first if they are not critical to other stages of life). Finally, simulated annealing comes in: once the optimal/happiest places have been discovered, they can simply be enjoyed.
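
One way to read the first half of that plan (start from a prior, act with some randomness, update, and drift toward what works) is as Thompson sampling. That name is my own label for the pattern, and the sketch below is only a rough illustration of it, not anything the authors wrote. It assumes each life option either "pays off" or doesn't on a given try, with hypothetical payoff rates that the chooser cannot see, and it starts from a flat Beta(1, 1) prior for every option.

```python
import random


def thompson_sampling(true_rates, rounds=2000, seed=0):
    """Pick among options by sampling from a Beta posterior for each one."""
    rng = random.Random(seed)
    wins = [1] * len(true_rates)    # Beta(1, 1) prior: no opinion yet
    losses = [1] * len(true_rates)
    pulls = [0] * len(true_rates)   # how often each option was tried
    for _ in range(rounds):
        # Randomness: draw one plausible payoff rate per option from its posterior.
        samples = [rng.betavariate(w, l) for w, l in zip(wins, losses)]
        choice = samples.index(max(samples))
        pulls[choice] += 1
        # Try the option, then update its posterior with the outcome.
        if rng.random() < true_rates[choice]:
            wins[choice] += 1
        else:
            losses[choice] += 1
    return pulls


if __name__ == "__main__":
    # Hypothetical hidden payoff rates for three options.
    print(thompson_sampling([0.2, 0.5, 0.8]))
```

Early on the draws are all over the place, so every option gets tried. As evidence piles up, the posteriors tighten and most of the attempts go to whatever has actually been working, which is roughly the explore-then-exploit arc described above.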

Oh, and just one more thing for that conclusion, the brain is a very powerful storage device, but is not very fast in searching through all the data – like a hard disk drive, not an SSD. The authors mention how utilizing simple rules, heuristics, is a very quick way of utilizing such a brain. Learn the broad strokes well, as those are what you can remember easily, rather than small details, which you can’t utilize well even if you know them.

The authors failed to mention an explicit application of ‘caching’ with our own brains to overcome the querying limitation of memory, which is to say, create a cache. This is done by using, for example, a ‘mind palace,’ perhaps familiar to you from Sherlock, but dating back to ancient times and the likes of Cicero. Cicero would remember items in his speeches based on connections throughout a walk-through of his home; thus a mental image of his home stored his access to the appropriate thoughts, and was emptied and refilled for each new speech. I wonder if this goes even further towards arguing for more exploration. After all, you can learn the high-level details (the things you will really be able to use well) pretty quickly, so it’s better to focus more on exploring, rather than developing an already established skillset.

Ultimately, I wonder if maybe a comic would present this information far more effectively for the masses. Here’s an example:

I thought it would be useful for me to run through some of my own life considerations in the context of what is recommended in Algorithms to Live By.

So what changes do I make to my life as a result of this?

I think one thing that is very clear is that I am still very strongly in the “explore” portion of my life.

Do I keep playing classical guitar?

I have estimated the sunk cost of my musical education to be a shockingly huge figure, something over $10,000. That alone would make my parents order me to keep playing for life – they paid most of that after all. But they tend to think far more about sunk costs than, as has been shown, you should… I, on the other hand, feel like guitar is lots of work (many months for each piece) with relatively little purpose (I have no intention of performing regularly), so why keep going? Well, I do like playing, so my response so far has been to keep playing, but as a low priority item. But what do I really do long term? Right now, I am thinking of taking up a different type of guitar playing – exploring playing metal on an electric guitar perhaps? Thus, the focus stays on the ‘exploration’ stage of my guitar relationship. This is a great solution, but I’m a bit nervous about such an undertaking, even though I am a great fan of power metal.

Do I move to work in another city?

I already brought this up in the original article, and it is a question that weighs heavily on my mind. The obvious cons are: not utilizing my large collection (sunk cost) of local knowledge, local friends, and local support network, and not being able to access my family’s farm routinely. Here, I think the suggested explore/exploit answer is an overwhelming YOU MUST MOVE. I have never lived in another city, so I really should get around to exploring what that is like. That said, I shouldn’t immediately dedicate myself to living there too long, as more exploration might still be useful. Constraint relaxation might also come into play here, since my standards are all set by my experience in MSP and might not be comprehensive, so I should consider options I might not otherwise.

Where do I end with my hobbies?

I have far more hobbies than most people, if you hadn’t figured that out from my blog already (and I should note that many of my hobbies aren’t yet mentioned on this blog). I feel overwhelmed juggling them all. Here, again, I need to get over the sunk costs – it is okay to follow the algorithm and move towards another option while forgetting past ones. I think dancing is going to fall into this bin. I love dancing, but I just find the social complications to be more trouble than I prefer for activities. So farewell Argentine tango and swing, I wish I had gotten to know you better… But maybe I will, someday, find a different environment in which to approach those again. It also means I should at least try HEMA, as I’ve always meant to…